“There is no such thing as ‘stone’; there are many different types of stones with different properties and these stones become different through particular modes of engagement.” —Chantal Conneller, An Archaeology of Materials

Abstract

This chapter showcases human engagements with the most primal material of all, earth itself, beginning in the Neolithic period (which began ca. 9000 BCE in the Fertile Crescent), when people relied on domesticated plants and animals for their livelihood. The Neolithic has also been called the Age of Clay because clay and soils were critical materials for many aspects of daily life. A case study of an important Neolithic settlement, Çatalhöyük, demonstrates how people and clay became interdependent, resulting in an “entanglement” that influenced human actions and values. The Neolithic entanglement with clay, multiplied countless times all over the globe, led to significant historical changes in human society that still reverberate today. This case study also provides general insights for understanding the relationships between humans and materials. How people engage with the potential and actualized properties of materials in production processes is key to understanding how materials have affected societies over time.

Introduction

How have archaeologists, and philosophers before them, made sense of human history? From the beginning of the discipline in the 19th century, archaeologists focused on the different materials manipulated by humans over time. Thus they began to organize the human past in a logical way—a series of progressive stages—that is still influential today. But it is only much more recently that archaeologists and other scientists have begun to investigate the impacts of specific materials on human societies. It is not an exaggeration to say that human-material interactions changed history. People continue to be shaped by relationships with certain materials that began long ago. This chapter examines human interactions with a humble material—earth in the form of clay—starting over 10,000 years ago.

Thomsen’s Three-Age System

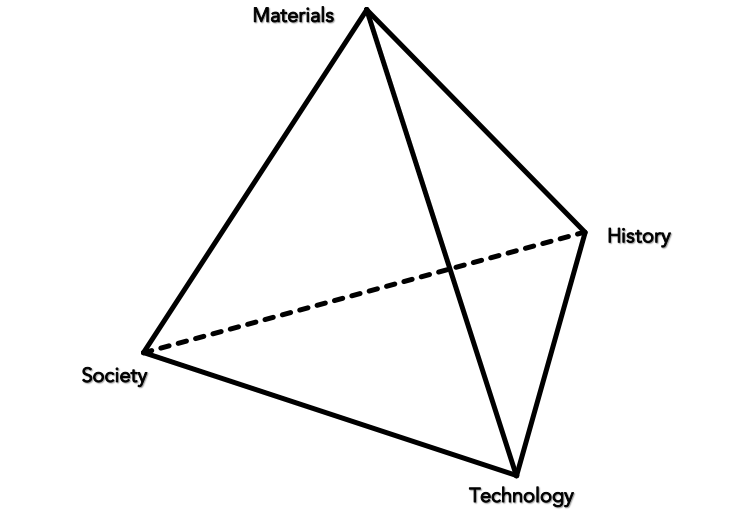

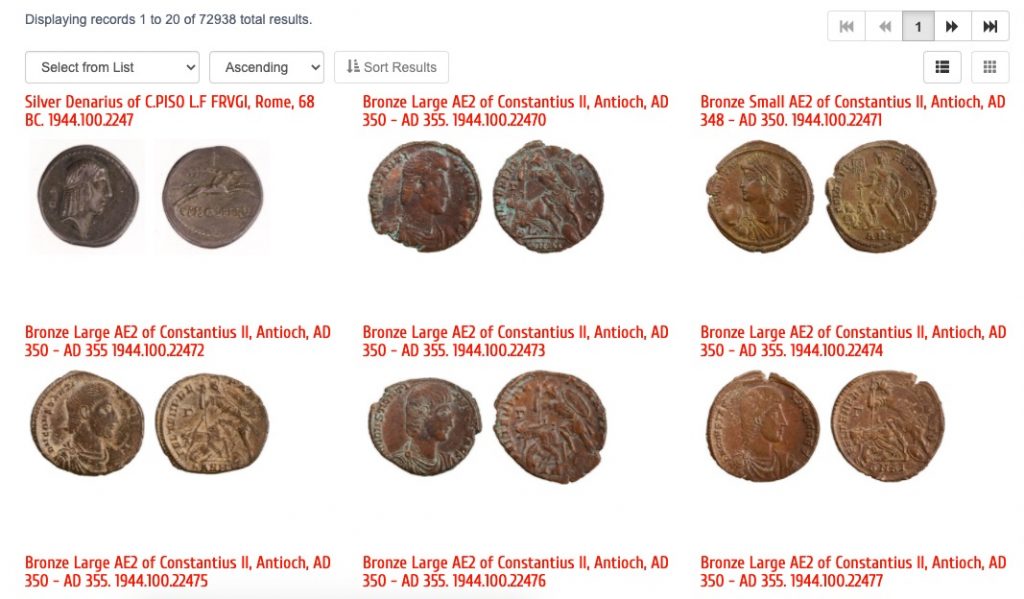

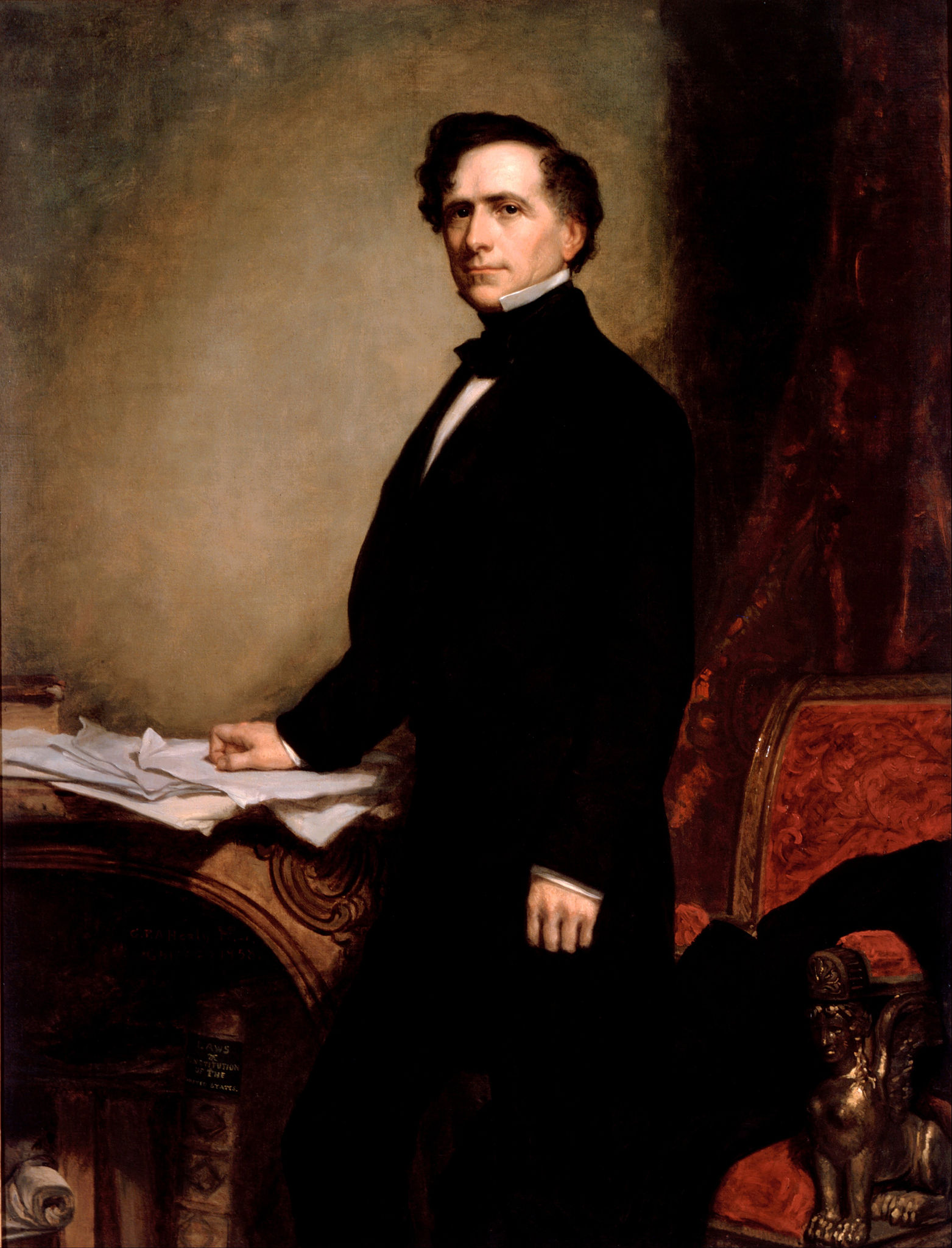

In 1816, Danish antiquarian Christian Jürgensen Thomsen (1788–1865) faced a major challenge. The Danish Royal Commission for the Preservation and Collection of Antiquities had been amassing collections of ancient artifacts from all over the country to house them in what would become the National Museum of Denmark (Figure 2.1). The commission asked Thomsen to organize the various objects for an exhibition to educate Danes regarding their early history. How could he best make sense of them?

Figure 2.1 Thomsen with visitors in the Museum of Northern Antiquities, Denmark, in an 1848 drawing. [Wikimedia Commons.]

Thomsen decided to organize the artifacts by their raw material, which provided clues to their historical contexts. Nearly 2,000 years earlier, the Roman philosopher Lucretius had speculated that the first humans used stone and wood for implements, and only later developed bronze and then iron (see Curta, “Copper and Bronze”). But this idea had never before been tested.

Thomsen reckoned that ancient people still used stone tools after bronze metal-working appeared, and that they continued to employ bronze artifacts after iron was introduced. But to group objects solely by material was not meaningful to the history of Denmark, because it ignored cultural information about how and when past peoples made and used these objects.

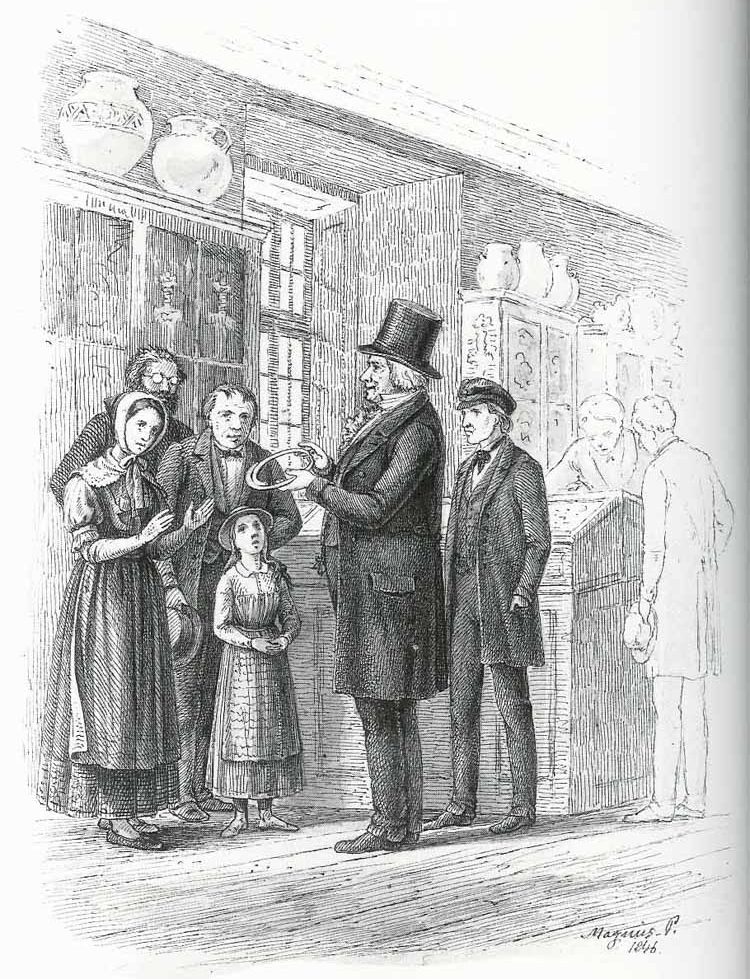

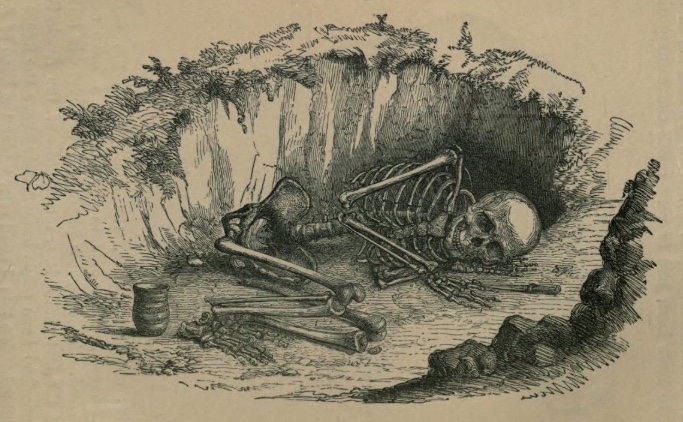

To understand better when objects made of these materials were used, Thomsen focused on artifacts from “closed finds” such as burials and hoards (buried caches of objects). With closed finds he could assume that all the items found together were in use at the same time (Figure 2.2). In this way, he could determine which things were probably contemporaneous and used by the same peoples. Thomsen ended up with distinct groupings that, he suggested, formed a sequence in time, supporting Lucretius’s early idea with material evidence. He proposed a series of “ages” in early Danish history: an initial period with only stone artifacts (Stone Age), a later period with both bronze and stone tools (Bronze Age), and a final Iron Age with objects of iron, bronze, and other materials.

Figure 2.2 Engraving of an early Bronze Age burial in Britain. This “closed find” includes a ceramic beaker, a bronze dagger, and a stone projectile point (just above the dagger in the drawing). [Llewellyn Jewitt, from Grave Mounds and their Contents (London: Groombridge and Sons, 1870), 14. Internet Archive.]

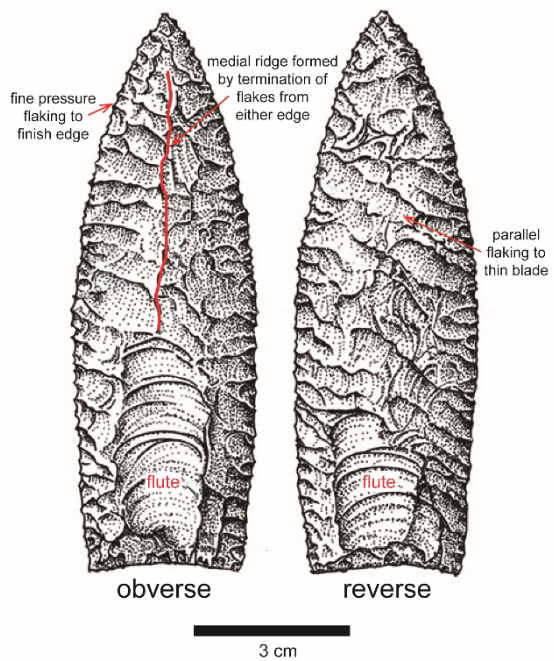

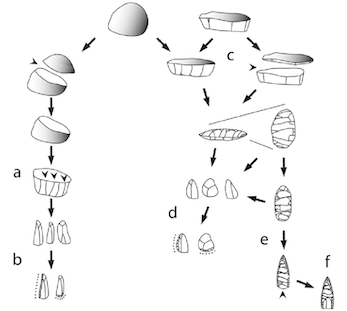

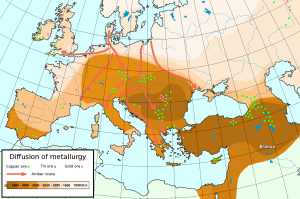

Published in 1836, Thomsen’s innovative chronological scheme energized the developing field of archaeology. His Three-Age System of Stone-Bronze-Iron was later applied to all of Europe, Africa, and Asia, although the “metal ages” do not pertain to the Americas, Australia, or Oceania. The “Stone Age” was subsequently divided into sub-periods, the earliest two being the Paleolithic (Old Stone Age) and Mesolithic (Middle Stone Age). Both are characterized by tools made from the forceful removal of chips (flakes) from stone (see Sassaman, “Ceramics”). The subsequent Neolithic period, or New Stone Age, was distinguished by a new technology for grinding and polishing stones to make implements.

What’s Missing in the Three-Age System?

Thomsen based his artifact groupings on hard, durable objects of some value, intentionally buried in graves or hoards. His chronological scheme thus neglected the soft, perishable, non-grave-worthy materials used by Denmark’s early inhabitants. Our ancestors used many other “earthy” materials that are not represented in the Three-Age System.

Furthermore, the scheme gives no sense of how and why earlier peoples came to use certain materials at different points in human history. Nor was Thomsen able to articulate how those materials affected the development of society in advantageous or disadvantageous ways.

This chapter showcases human engagements during the Neolithic period with a material that Thomsen and Lucretius neglected, even though it is the most primal material of all: earth itself. The Neolithic “soil revolution” provides historical background for a case study of the entanglement with clay experienced by the inhabitants of Çatalhöyük, an ancient settlement in modern-day Turkey.

Entanglement is the key idea introduced in this chapter. It refers to the interdependency between humans and things, based on the properties of the materials that things are made of. Entanglement becomes an entrapment that influences human actions and ideas. The Neolithic entanglement with clay, multiplied countless times all over the globe, led to significant historical changes in human society that still reverberate today.

This case study from the deep past also provides a method for analyzing contemporary and future relationships between humans and the materials they depend on. The final section thus draws out some “material lessons” we can use to better understand the impacts of materials on human society.

The “Soil Revolution” and the “Age of Clay”

Changes in the Neolithic Period

Although Thomsen’s Three-Age System is overly simplistic, the division of the Stone Age into earlier (Paleolithic and Mesolithic) and later (Neolithic) components is still useful. However, these terms no longer refer simply to changes in stone tool technology, nor to exact periods of time. Instead, Neolithic now designates the shift from a nomadic food-collecting to a settled food-producing way of life dependent on domesticated plants and/or animals. This gradual transition occurred in different parts of the world at various times, beginning about 9000 BCE in the Fertile Crescent area that stretches from Syria into Iraq and Turkey.

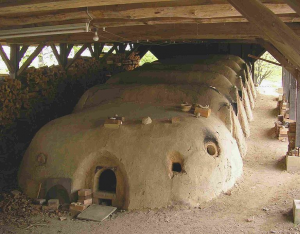

Figure 2.3 Reconstructed Neolithic house interior in Albersdorf, Germany. Note the use of clay or earth for parts of the wall, the hearth/oven, and pottery. [Photo by user Nightflyer (2012), shared under a CC-BY 3.0 Unported License. Wikimedia Commons.]

Being tied to the land to raise crops or to tend livestock required more durable houses and other structures. Furthermore, most Neolithic peoples developed pottery vessels to store, serve, and sometimes cook foods (Figure 2.3). These changes were so substantial, modifying the course of global history, that this transition was dubbed a Neolithic “Revolution.”

New Engagements with Soil and Clay

Figure 2.4 The great henge (circular ditch and embankment) at Avebury, England, near Stonehenge. Most of the stones from its stone circles have been removed. [Photo by author (2006).]

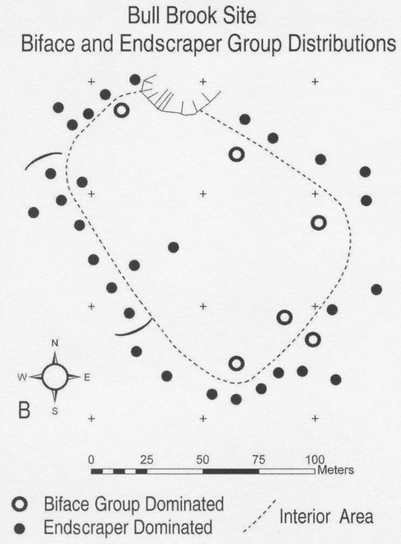

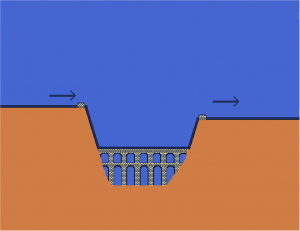

Although the shift from mobile to settled village life varied tremendously across the world, there were certain commonalities to the experience. One of them was an increase in practices that opened up the earth’s surface. Excavations were necessary for new forms of architecture and land modification. These included holes for house posts, pits for storage or garbage, “borrow pits” made when removing soil for other purposes, graves for the dead, ponds for livestock, and ditches for drainage, irrigation, or for ritual spaces, such as the circular ditches and embankments (henges) in Great Britain (Figure 2.4).

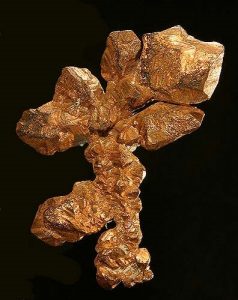

Digging also exposed new earthy materials lying below the surface, such as flint, limestone, and clay, which were utilized in new tool and building technologies. In the late Neolithic, people began to mine and process copper and gold. This early metalworking marks the Chalcolithic (copper-stone) period, precursor to the Bronze Age (see Curta, “Copper and Bronze”). Archaeologist Julian Thomas described these actions of digging into and mounding up dirt as a new “set of relations of reciprocity with the earth itself” with new methods for transforming earthy materials. Put another way, Neolithic societies were “soil-based societies” because soil (broadly speaking) is a common denominator in all the major changes of this new way of life (Figure 2.5).

Clay is technically defined as the finest sediments, with grain diameters of less than 1/256th of a millimeter (about 4 micrometers). The high surface area of these plate-like grains makes true clay sticky and plastic. However, archaeologists use a looser characterization based on clay’s plasticity, treating as clay any fine-grained sediment that can be molded into an internally cohesive form. “Clay” deposits typically consist of both true clays and non-clays, that is, of both fine-grained and coarser-grained sediments.

Soil was necessary to grow the crops, to pasture the animals, to erect more durable structures, and to make pottery. According to archaeologist Nicole Boivin, a veritable “Soil Revolution” occurred that has gone unrecognized because we tend to think of soil as the unchanging stuff beneath our feet.

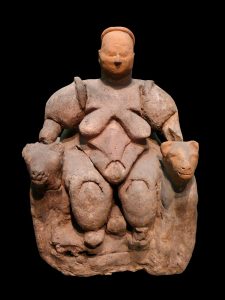

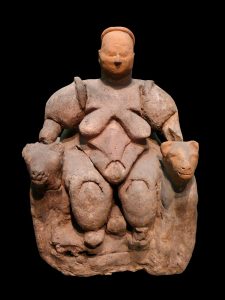

Figure 2.6 Seated “Mother Goddess” flanked by felines. Clay figurine excavated by James Mellaart at Çatalhöyűk in 1961; the head is a restoration. [ca 6000 BCE, Museum of Anatolian Civilizations. Photo by Nevit Dilmen, shared under a

CC BY-SA 3.0 Unported License.

Wikimedia Commons.]

On the contrary, soils underwent dramatic modifications as they were drawn into Neolithic and later technologies.

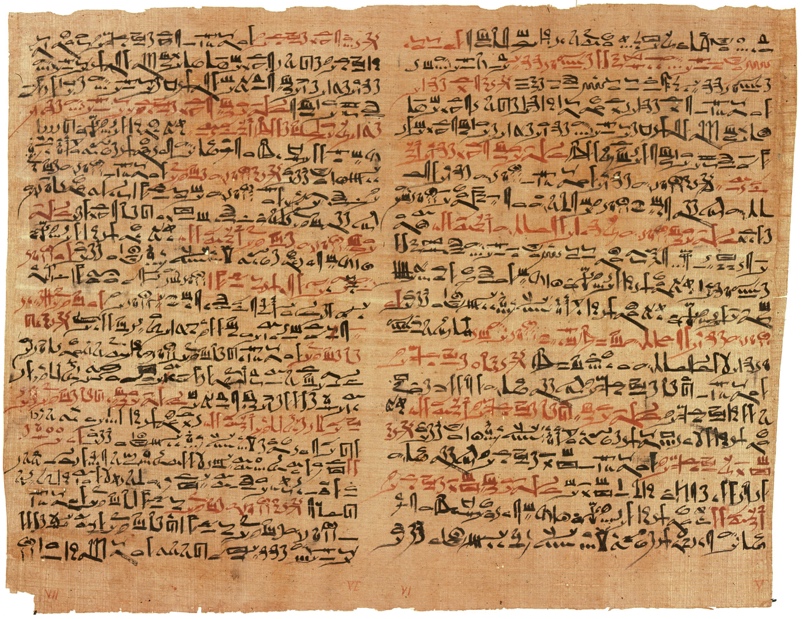

The dominant earthy material for Neolithic peoples was clay. It was used to make all or parts of houses, pottery, figurines, cooking balls, fishnet weights, jewelry, gaming pieces, and many other artifacts (Figure 2.6). In their everyday lives, people were enclosed in clay, manipulated clay, ate from clay, wore clay, and experienced its different forms in close, personal contexts. In addition, clay objects lasted longer than those made of organic materials such as fiber, wood, or bone, and their longevity gave people a new sense of their own enduring histories.

The remarkable increase in material production of clay objects and structures along with emerging transformative clay technologies motivated archaeologist Mirjana Stevanović to insert an “Age of Clay” between the Stone and Bronze Ages.

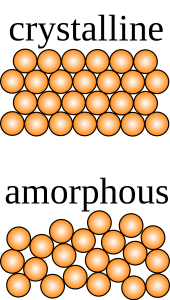

Properties of Clay

Why was clay so important? A major reason, besides its abundance and ease of acquisition, is that clay exhibits the property of plasticity or malleability. Clays are fine-grained sediments that, mixed with the right amount of water, can be formed into a variety of shapes.

Clay was critical to the development of many innovative transformative technologies of the Neolithic period. These transformations were not merely mechanical—as in the polishing of hard stones to make axes to cut trees and grinders to process grains—but were increasingly structural and chemical.

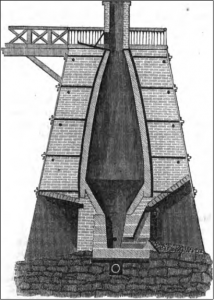

Figure 2.7 Pottery in the backyard of a potting household in Atzompa, Oaxaca, Mexico. The pots in the center are drying; those in the lower left corner have already been fired in the kiln (out of the photo, on the left). [Photo by author (1984).]

Mixing clay with water created structural changes, allowing it to be formed into figurines, bricks, vessels, jewelry, and other objects. Drying or heating those objects, in the sun or in an oven or hearth, effected structural modifications that made them harder and more durable. The innovation of a high-temperature open or closed kiln for firing dried clay objects produced chemical changes, transforming them into ceramics (Figure 2.7; see Sassaman, “Ceramics”).

Disadvantageous Properties

However, Neolithic peoples also had to deal with certain problems of working with clay. Clay is heavy and bulky to move from where it is dug out of the ground to where it is needed. Potters must then process the mined clay, usually by pounding it into a gritty powder and removing impurities. Adding water makes clay heavier still and harder to maneuver.

Clay objects require water to be formed, but as the water evaporates, they tend to shrink and crack. Temper (a non-plastic material) was typically mixed in with the prepared clay to reduce shrinkage. Various materials served as tempers, including plant fibers, sand, volcanic ash, limestone, shell, and even ground-up ceramics. Clay objects are also fragile; they break easily, and once broken, lose most of their value.

This video (https://www.youtube.com/watch?v=AO0F8y3aNOo) features potters in Botswana’s Kgatleng District.

- How do the women prepare the clay they have mined to make it usable?

- How do they know how much water to add to the clay?

- Do they shape the clay into forms such as bases or coils before they make a pot? Do they use a potter’s wheel?

- What kind of fuel do they use to fire (heat) their pottery?

- How would you describe the kiln they use? Is it what you expected?

- How does pottery-making create opportunities for social interactions among women?

For these reasons, adopting a clay-centered technology meant a loss of mobility. Neolithic peoples were less free to move about because of their accumulating possessions and the desire to be close to both clay and water. At the same time, they required more stable settlements in order to consistently control their agricultural fields or livestock. This growing “investment in place,” requiring more permanent settlements, was often accomplished by making more durable residences out of earth and maintaining them across generations.

The Entanglement of Clay at Çatalhöyük: Background

Çatalhöyük was one such long-lived Neolithic settlement. It is also an unusually large site, located in south-central Anatolia in modern-day Turkey, southeast of the city of Konya (Figure 2.8). People lived at Çatalhöyük continuously for over 2,000 years, from 7400 to 5200 BCE.

Figure 2.8 Map of modern Turkey showing the location of Çatalhöyük.

Archaeologist Ian Hodder, who began directing excavations at Çatalhöyük in 1993, demonstrated the extent to which its Neolithic inhabitants became entangled with clay throughout their settlement’s long history. His analysis helps to explain the impacts of clay over time on this and other ancient societies. It also illustrates aspects of the relationships between people and the materials they use that can be applied to many other materials in the past and present.

Çatalhöyük

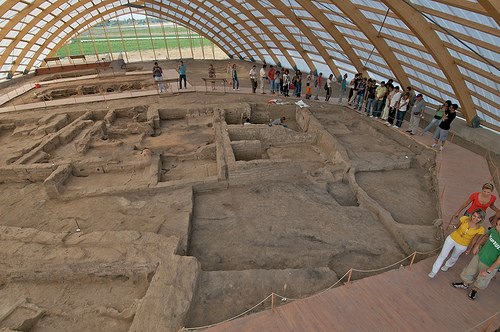

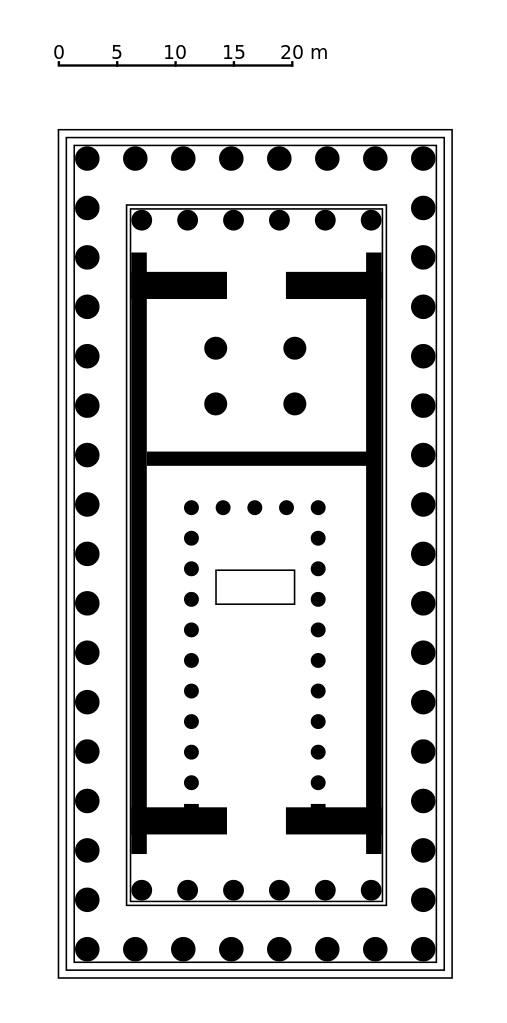

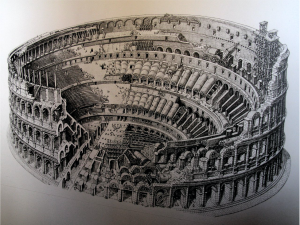

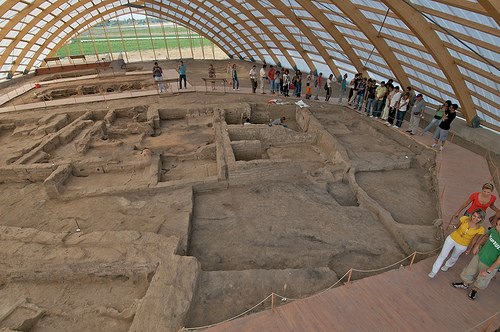

Like many Neolithic sites in the region, Çatalhöyük (“forked-mound”) is a human-made mound (höyük in Turkish) created by the continuous building of clay houses atop one another over many generations (Figure 2.9). Excavations in the late 1950s and 1960s led by archaeologist James Mellaart first brought to light its unusual settlement plan. The town consisted of contiguous multi-room rectangular houses all made of clay, including sun-dried clay bricks (called mudbricks).

Figure 2.9 Satellite photo of Çatalhöyük. The white areas on the main (east) mound are roofs to protect the north and south excavation areas. Note the modern agricultural fields all around the mounds. [Google Earth.]

So far, archaeologists have not discovered a town center or non-residential structures; the settlement seems to be all houses.

Because houses abutted one another, most people entered and exited their dwellings through a hole in the roof. There were no streets; residents used the flattish roofs both as outdoor space and as walkways across the settlement (Figure 2.10). Families apparently controlled their own houses across generations, even making their own mudbricks, as no two households utilized the same clay materials in the same way for their residences.

Figure 2.10 Excavations under the north shelter in 2010 revealed adjoining houses. Rooms within a structure are divided by single walls, but two adjacent structures each have their own exterior wall (see center of photo). [From excavation, shared under a CC-BY-NC-SA 2.0 Generic License. Flickr.]

After a period of 50–100 years, the occupants collapsed the roofs, tore the house walls down to about half their height, and filled the remaining cavity to serve as the foundation for a new house atop the old. They did not recycle mudbricks from earlier buildings, so each new construction phase required new bricks.

As a result of these multiple independent building decisions, the mound developed unevenly, with roofs of individual houses at different elevations. Ladders were likely used to go up and down over house roofs to get off the mound to tend agricultural fields and livestock (goats, sheep, and cattle). The people of Çatalhöyük made and lived in a dynamic world constructed of clay, which impacted every major aspect of their lives.

Clay and the Founding of Çatalhöyük

Besides being integral to the form and growth of Çatalhöyük, clay was essential to its location. The settlement was established on the Konya Plain, the bed of the drying Ice-Age Lake Konya. The absence of stone and scarcity of trees here meant that clay would be the primary material for building and for many other needed objects. In Neolithic times, this lakebed was a source of multiple kinds of clay sediments: marls (highly calcareous clays); backswamp (alluvial) clays formed from the deposition of sediment in the lake; reddish clays with silt; colluvium that accumulated at the base of the growing mound; and gritty clays.

However, the scarcity of fuel and the enormous number of clay bricks needed for construction also meant that people relied on the sun to dry the bricks rather than firing them in kilns. This “subceramic” technology extended to other clay objects, including figurines, clay balls, and some pottery.

Çatalhöyük’s first settlers placed their houses in an area of the lakebed with thicker deposits of backswamp clays, rather than on nearby areas elevated by marl deposits. Mudbricks are heavy, especially when wet. By building directly on this chosen clay source, the inhabitants sought to avoid high transport costs. The decision to locate the initial settlement directly on the low-lying, clay-rich areas rather than on natural rises exposed it to flooding. However, as more houses were stacked upon the earliest one, the resulting mound elevated them above the flood zone (Figure 2.11).

A World Heritage Site

Çatalhöyük has many unusual characteristics and provides significant information on the transition to an agricultural way of life. The main mound was continuously occupied between 7400 and 6200 BCE and comprises eighteen distinct building levels. At its maximum size, it was twenty-one meters tall and extended over thirteen hectares. At different times approximately 3,500–8,000 people lived here, a population equivalent to a large town or even a small city. A shorter mound, dubbed Çatalhöyük West, was subsequently occupied from 6200 to 5200 BCE in the Late Neolithic (Chalcolithic) period. Because of its importance, Çatalhöyük was designated a UNESCO World Heritage Site in 2012.

Çatalhöyük is also well known for its mural art, consisting of paintings and low-relief sculptures on clay walls, as well as clay figurines and unique clay supports for erecting cattle horns (bucrania) in the walls of some houses. The Çatalhöyük Research Project, directed by Prof. Ian Hodder of Stanford University, is a 25-year program of excavation, conservation, interpretation, and presentation of findings.

Figure 2.11 South area excavations in 2014 show multiple levels of houses, with the individual bricks visible in some places. Sandbags help conserve the walls against continued slumping and deterioration. Both the alluvial and colluvial sediments were utilized to make bricks and other clay objects later in the sequence of occupation. [Photo by Jason Quinlan, shared under a CC-BY-NC-SA 2.0 Generic License. Flickr.]

In addition to mudbricks, backswamp clay was the principal material for clay cooking balls and some early pottery. But as residents continued to dig out the backswamp clay, they depleted this resource in their immediate environs, while also exposing other clays and marls underneath. The whitish marls (highly calcareous clays) beneath the backswamp clay had their own uses, especially to make the plaster that covered and protected the mudbrick walls and served as mortar for the mudbricks (Figure 2.12). Thus, at Çatalhöyük there was “no such thing as clay”; many similar materials were differentiated by their specific properties, locations in the landscape, and uses over time by the inhabitants.

Extracting clays changed the local landscape. Digging for clay disrupted the flow of water in what was a wetland environment, changing the drainage and thus vegetation patterns. A rough estimate of the amount of clay needed for Çatalhöyük’s residential uses during the life of the settlement is an astonishing 675,000 cubic meters! The borrow pits excavated to obtain clay were regularly flooded, filling with additional water-deposited sediments (alluvium). As the mound grew higher, erosional sediments accumulated at its base (colluvium), mixed with artifacts.

In sum, even as clay shaped the Çatalhöyük community, their collective actions also impacted the clay deposits themselves, changing their composition.

Hodder’s Entanglement Model

Drawing upon his excavations at Çatalhöyük, Ian Hodder devised a model of how humans and materials become dependent upon one another, creating an entanglement.

This model is a highly useful method for analyzing the impact of materials on society. It is based on four simple-sounding premises:

- Humans depend on things.

- Things depend on other things.

- Things depend on humans.

- Humans depend on things that depend on humans.

(Note that Hodder takes as axiomatic that humans depend on other humans.) His fourth premise, which builds upon the first three, is the entanglement—a human-thing interdependency. Applying these premises one by one, Hodder analyzed how the inhabitants of Çatalhöyük became entangled with clay, and how that entanglement changed their lives and their history.

Ona Johnson and Karis Eklund made this video, “Welcome to Çatalhöyük,” in 2004 (https://www.youtube.com/watch?v=CNZRzKChn84&t=84s) to introduce the site to visitors.

- What is so special or unique about Çatalhöyük?

- What does the video tell you about the original wetland environment of Çatalhöyük?

- How was Çatalhöyük unlike a modern city? Why were houses so important?

- What did you think of the ancient artworks made by the Neolithic inhabitants of Çatalhöyük?

Humans Depend on Things

Regarding “things” made of clay, the first premise is indisputable. The earliest houses were built directly upon thicker deposits of backswamp clay needed for structures. Residents of Çatalhöyük and other Neolithic sites depended on their houses of mudbrick walls; interior hearths, ovens, and benches made of clay and plastered with marl to help make them waterproof and durable; and many other clay objects (Figure 2.13).

Figure 2.13 Overhead view of a house (Building 56) in the South Area. Note the extensive use of clay for all the house features, including benches, partitions, and the hearth (center left). [Photo from excavation (2006), shared under a CC-BY-NC-SA 2.0 Generic License. Flickr.]

As Hodder explained, the residents of Çatalhöyük lived in an intimate, sensory world of clay. Clay dust was ubiquitous and got into their hair, skin, and lungs. It also got into their food because they used heated clay balls to cook their meals, such as animal stews. The dead were buried under the floors (within the earlier filled-in houses), where the clay absorbed the liquid and odors of bodily decay.

Things Depend on Other Things

The second premise is a little more difficult to understand and requires a close examination of the word “thing.” Although this word is so generic that it is difficult to agree on a definition, Hodder relied on the influential insights of German philosopher Martin Heidegger. Heidegger observed that the original meaning of “thing” in Germanic languages (including English) was a “gathering” or “assembly.” Things actively gather: they gather their individual properties, other things, processes, people, and places.

There are several ways to understand how clay things gathered or assembled. The clay sediments used to build Çatalhöyük “gathered” (were dependent on) such other things as the hydrogeology of the former Lake Konya and the extraction of the backswamp clay to make the mound. This gathering includes the resulting alluvial clay deposited in the borrow pits and colluvial sediments coalescing at the base of the mound. And all of this movement of clay depended on the actions of people and the force of gravity.

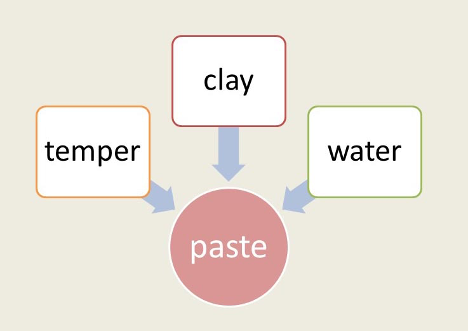

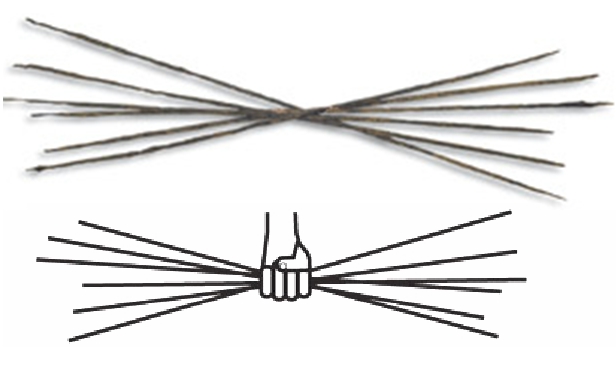

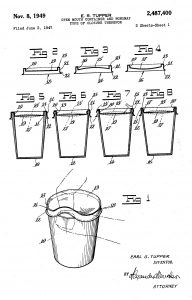

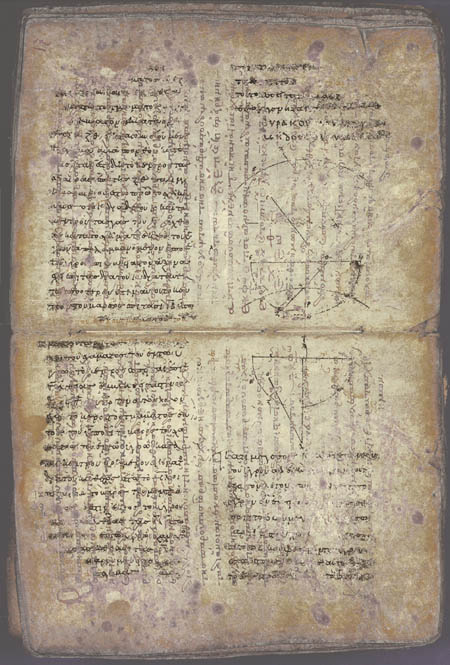

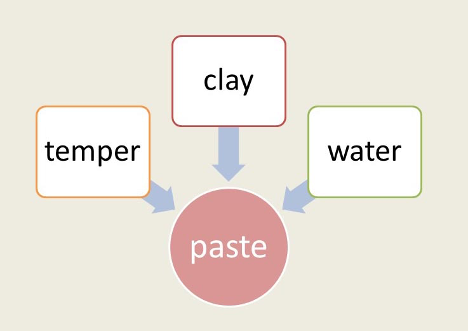

Making a clay object, whether a vessel or a brick, also required gathering component parts, each with its own properties, and correctly assembling them. The parts depended on one another to create the object. Hodder describes this assemblage in detail for paste, the prepared clay ready to be molded or modeled into an object (Figure 2.14). The clay itself brings its own qualities to the paste: grain size and shape, chemical composition, shrinkage factor, cohesion, any impurities not previously removed, thermal properties, and so forth. A mineral temper (a non-plastic addition) contributes a similar set of properties of its own.

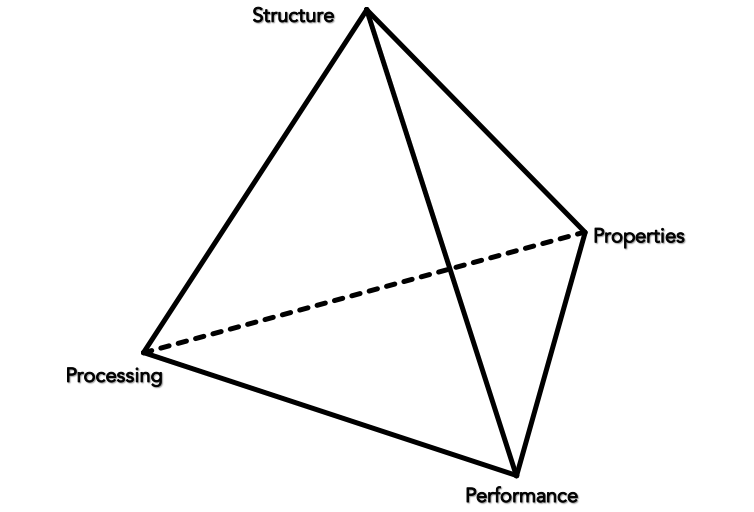

Figure 2.14 Paste as a “thing” is an assembling of the properties of clay, temper, and water. [Adapted by the author from Hodder, Entangled, figure 6.1.]

However, the temper for the early clay artifacts at Çatalhöyük consisted of organic fibers, including wild grasses, straw, and cereal chaff that had to be collected and stored when the grains were harvested. Those activities and materials are part of the gathering process as well. Finally, the water added to mold the clay mix would vary in terms of its proportion to clay and any impurities it might contain. Therefore, making any composite material, such as paste, requires a gathering or assembly.

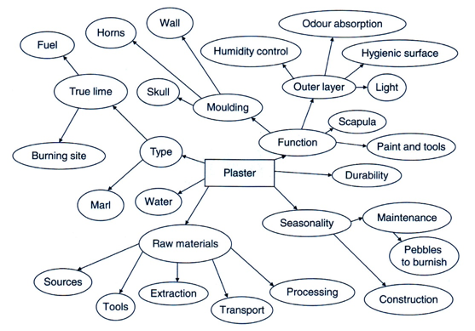

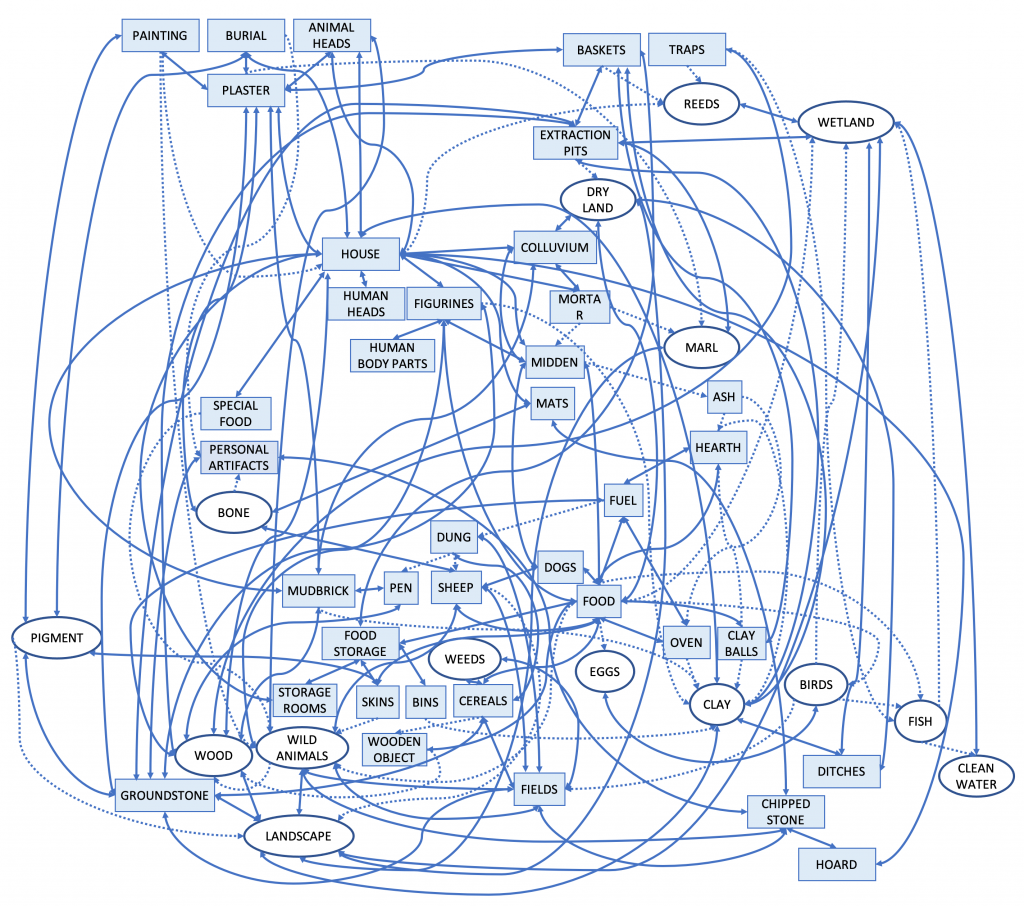

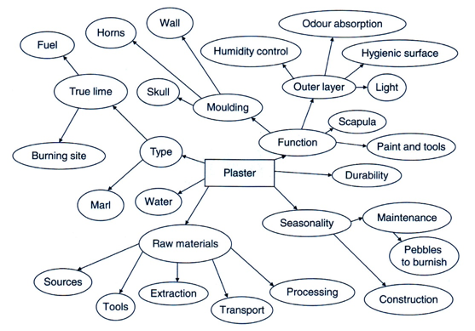

At a larger scale, a thing is an assemblage because other things, people, and places must come together to manufacture, use, repair, or discard it. As an example of this level of gathering, Hodder diagrams (Figure 2.15) how the marl plaster used to cover the mudbrick walls is a thing.

Figure 2.15 This diagram illustrates how clay (marl) plaster (center rectangle) as a “thing” gathers tools, materials, activities, and processes. [From Hodder, Entangled, figure 3.2.]

Plaster assembles the raw material, the marl (calcareous clay), which required certain tools to excavate it from below the backswamp clay deposits and baskets or other containers to transport it to the places for processing. Lime, obtained by acquiring limestone and heating it at a special place, was added to the marl (see Eaverly, “Concrete”). Water also had to be carried in containers from its source. Once made, the plaster was applied with certain implements and then burnished to a hard surface with pebbles. This work was dependent on the season of the year and the availability of sufficient laborers. All of these components were “gathered” according to a specific “operational sequence” (see Sassaman, “Ceramics”) to make the plaster to maintain the walls, which required frequent resurfacings due to wear and tear. And consider, of course, that the walls were dependent on the plaster to function properly.

A more straightforward example of how things depend on other things comes from the houses themselves. Families in two adjoining houses came to build their own house walls next to each other rather than share a common outer wall (see Figure 2.10). This was not due just to a concern for property boundaries. Over time the mudbrick walls would slump or crack, and they depended on the adjacent wall to help them stay upright. Thus Hodder concluded, “All things depend on other things along chains of interdependence in which many other actors are involved. . . . Things in their dependence on other things draw things and people together.”

Things Depend on Humans

While things such as mudbricks and pottery depend on humans to come into existence, they also retain that dependency over time because they are unstable. Materials and objects decay, transform, break, fall apart, and sometimes just run out. This dependency is well-illustrated by the mudbrick walls of Çatalhöyük.

As noted, the earliest walls and bricks were made of the backswamp clay. This is a smectitic clay; smectites are a group of phyllosilicate minerals with a high propensity to shrink and swell. In other words, smectitic clays expand quickly when mixed with water, but they shrink a great deal as they dry, and continue to shrink long afterwards. Because the bricks warped as they dried, they required tempering with plant material, and thick layers of marl mortar were needed to even them out when they were laid.

The walls of shrinking mudbrick became more unstable over time, requiring greater human investments to prop them up and keep their surfaces from cracking. As Hodder observed, “The relationships between molecules in the clay produced relationships between people in society at Çatalhöyük as they worked together to solve the problem of collapsing walls.” They tried various solutions, including additional layers of plaster and double-walls for mutual support. They also tried to prop up the houses with wooden posts, although this further depleted the area’s already scarce trees.

Over time, larger and heavier bricks were made to create thicker walls, and sandier clays began to be employed. This last change in clay material was likely related to the over-exploitation of the backswamp clays and the use of the sandier, grittier clay deposits underneath them. New technologies for manufacturing bricks were also introduced.

All in all, this greater investment of labor and resources in maintaining existing walls and building new houses limited the time and effort that could have been spent on other activities. As Hodder concluded: “People increasingly got trapped by bricks at Çatalhöyük.” And the bricks are still a trap today! Keeping the exposed 9,000-year-old mudbrick walls from crumbling is a constant chore for archaeologists and conservators, requiring regular injections of chemicals, consolidants, and grouts.

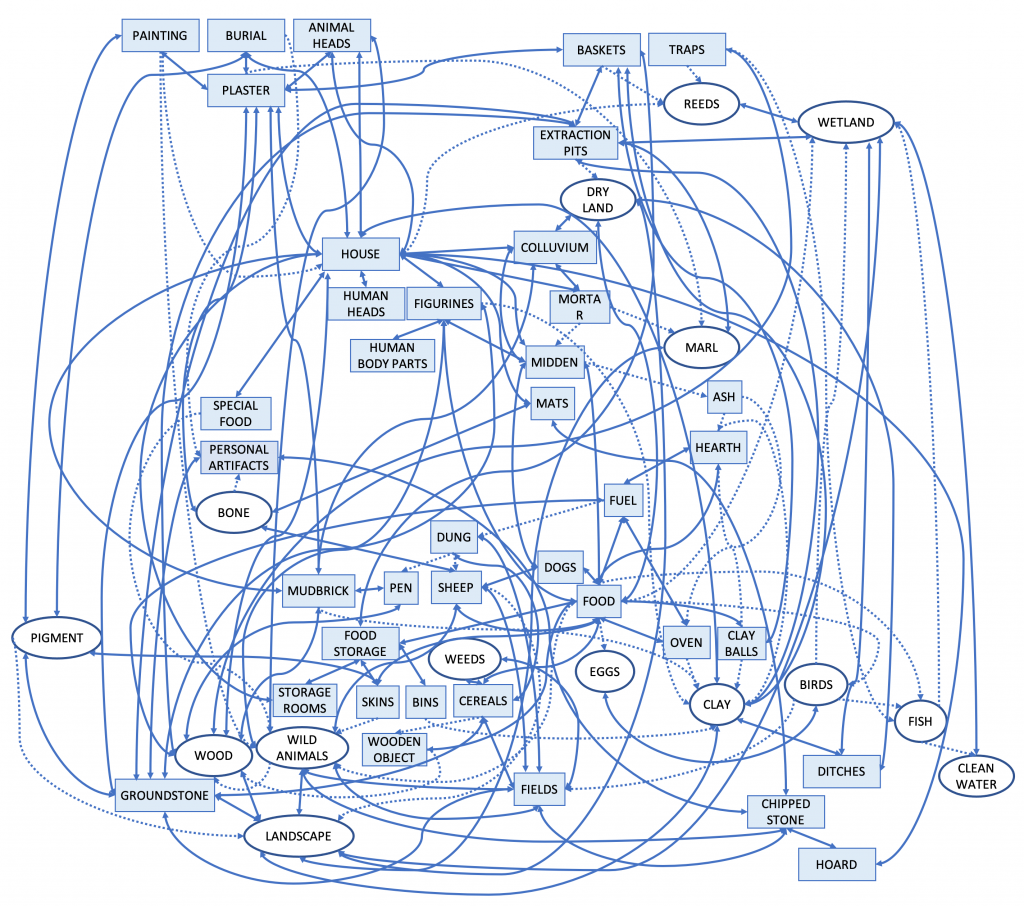

Entanglement: Things Depend on People Who Depend on Things

Entrapment is the historical consequence of entanglement, which unfolds as a self-propelling spiral. Hodder represents the entanglement of clay as a tanglegram, or interconnected web of dependencies (Figure 2.16). The increasing dependence of Çatalhöyük’s residents on clay for their structures required ever greater investment in maintaining and repairing them. As a result, they changed their activities, their environment (as they dug up the clay), and their social relations. This cascade of consequences illustrates how an entanglement traps people into doing some things and limits their ability to pursue alternatives.

Figure 2.16 A “tanglegram” graphically shows the interdependencies of people and things centered on clay (clay is the oval in the lower right corner, connected to “oven,” “hearth,” and “mudbrick”). [From Hodder, Entangled, figure 9.2.]

Entanglement and Social Change

The entanglement of Çatalhöyük’s residents with their mudbrick houses reveals how impossible it would have been for them to change basic architectural materials or construction technology. They were far too invested in their current practices and material dependencies to abandon them.

Entanglement thus provides a framework for understanding how people undergo societal changes—or alternatively, how and why they attempt to prevent change from happening. Hodder’s thesis is that the entanglements of humans and things create a historical trajectory that influences the success or failure of specific social and cultural traits. Because of the entrapment caused by one or more entanglements, people are generally unable to adopt a new material or technology, or cannot realize its benefits, unless it fits into an existing technology and labor regime.

From Cooking Balls to Cooking Pots

A good example of this latter scenario from Hodder’s case study is the gradual shift from clay cooking balls to cooking pots.

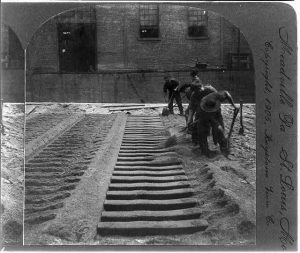

Archaeologists uncovered massive numbers of clay balls from the lower, earlier levels of occupation (Figure 2.17). Many of them were likely used to cook food, since cooking with heated clay balls is a common technology at comparable time periods elsewhere in the world.

The cook would heat the balls in the house’s hearth and then transfer them, probably with stick-tongs, to containers. These were likely clay-lined baskets that held water, bits of meat (usually sheep or goat), and other foods. However, the balls quickly lost their heat in the water and had to be put back on the hearth. Imagine the cook in every family carefully monitoring the movement of several balls back and forth from fire to basket for each cooked meal, making this a tedious and labor-intensive daily task.

The early balls were made of the same fiber-tempered backswamp clays as the mudbricks. And the same paste was used to make the earliest clay vessels, appearing around 7000 BCE. However, this fiber-tempered pottery was unsuitable for cooking, so these early pots probably functioned to serve food or drink. As such they did not directly modify the entanglement with cooking balls.

Nevertheless, the use of clays was changing. Digging for the siltier backswamp clays exposed underlying sandy clays. These clays did not require the addition of organic temper, and they transferred heat more efficiently than the fiber-tempered pastes. By about 6600 BCE, clay balls began to decline in frequency as larger, thinner pots of sandier clay appeared. These typically show exterior smudging, which indicates they were placed directly on a hearth as cookpots (Figure 2.18).

Cookpot Consequences

Cooking food in a pot frees the cook from constantly reheating clay balls, leaving time for other tasks. This change in cooking technology modified the scheduling of labor for domestic activities. Assuming that women and girls were in charge of food preparation, it would have transformed gender relations and the division of labor within the household. Ceramic cooking vessels also required more skill, a greater investment of labor, and new resources, including non-local clays and fuel for firing pottery (see Sassaman, “Ceramics”).

Thus, one form of the entanglement of clay gradually replaced another over several centuries, with profound reverberations for Çatalhöyük society. It was at this same period of transition that the settlement reached its greatest extent and was most densely packed with houses, now made of the larger, sandier mudbricks.

Material Lessons

The entanglement of clay at Neolithic Çatalhöyük illustrates more general insights for understanding the relationships between humans and materials, and the impacts of materials on society. These include how people engage with properties of materials in production processes, the critical difference between potential and actualized properties, and the recognition that some properties are advantageous while others are disadvantageous. When materials are treated as bundles of properties, the notion of a “thing” as a gathering or assembly again proves useful for comprehending the interactions between people and materials.

Materials? Or Properties?

A long-standing bias implicit in Thomsen’s Three-Age System, which introduced this chapter, is the notion that stone, bronze, and iron are homogeneous material categories that peoples throughout time and space perceived in an equivalent manner. That is, his scheme narrows our attention to materials as seemingly defined strictly by certain natural, essential qualities. In criticizing this bias, archaeologist Chantal Conneller argues to the contrary that people engage with the properties of materials, not with some universally recognized substance in nature (Figure 2.19).

Figure 2.19 Copper knife, spearpoints, awls, and spade, from the “Old Copper Complex” of the western Great Lakes, Late Archaic (pre-farming) period, 3000–1000 BCE, in the Wisconsin Historical Museum. [Photo by user Daderot (2013), shared under a CC0 license. Wikimedia Commons.]

Importantly, specific properties of a material will actually vary depending on human experiences with it and with the other materials and objects brought into relationships with it. These relationships include making comparisons and contrasts between materials—how is clay like or unlike stone (or metal)? They also more literally refer to physically combining materials (e.g., clay and water) or manipulating them with tools (e.g., polishing dried clay objects such as mortar or pots to harden the surface).

Thus Conneller can assert in the above epigraph that “there is no such thing as ‘stone’.” Instead, a variety of materials may be lumped together at different times and in variable situations by the word “stone.” Alternatively, materials we would treat as all “stone”—running the gamut from talc to diamond—might be distinguished as different substances by other peoples.

“Making”

Materials, and the objects made of them, come “bundled” with multiple potential properties. “Making”—which includes “unmaking”—is an umbrella term introduced by anthropologist Tim Ingold to encompass the production processes by which people engage with the bundled properties of materials as part of the various projects they undertake. In some cases, properties are known and are drawn into strategic, intentional plans. In other cases, they emerge as unintended consequences of human practices. Thus, what a material is in the technical jargon of a modern scientist is not as relevant to those who use it as what it does in particular situations (Figure 2.20).

Because situations will vary, what a material does is subject to change. This means that any material should be treated as mutable, variable, and dynamic—not inert, fixed, and static. To comprehend the impact of materials on society we must attend to the activation of the properties of materials in human interactions with them, and also to the historical consequences of those interactions, as the Çatalhöyük case study demonstrates so well.

Potential and Actual Properties

In the process of making, some of the potential properties of materials are actualized through practical experience. Other properties are not, or not immediately, and remain virtual or latent, unrecognized or unvalued by people. Some properties may emerge as a consequence of physical processes, such as rusting or decay, that depend on certain environmental circumstances (in Figure 2.19, the copper has oxidized, turning its surface green). At Çatalhöyük, only the passage of time revealed that the smectitic mudbricks continued to shrink well after they were first sun-dried.

The actualization of potential properties may also result from transformations that reveal hitherto unrealized effects. For example, we consider clay useful because it (1) is easily molded into shape, and (2) can be made into hard and durable objects. However, these potential properties emerge only when a certain amount of water is added in the first case (Figure 2.20), and only through additional pyrotechnology (firing) in the second.

In many other instances, the potential properties of materials remain latent because they are not relevant to human projects (making). For example, ancient peoples used iron ores as sources of red and yellow pigments, or polished iron minerals such as hematite and ilmenite to make mirrors, long before iron-working was invented or introduced. Thus, “material reality is teeming with virtual or potential qualities or properties which never get actualized.” Making is what brings out these potentials. To understand a material, we need to know the history of how its various properties emerged out of the changing situations in which humans encountered it.

Figure 2.20 Properties of materials are engaged in their making or using, as here in the case of a potter at work in Jaura, Madhya Pradesh, India. Note the bowl of water kept next to the wheel. [Photo by user Yann (2009), shared under a CC-BY-SA 3.0 Unported License. Wikimedia Commons.]

Significantly, actualizing formerly unobserved or unimportant virtual properties is a source of innovation. For example, for millennia humans used clay in many ways without firing it at high temperatures to realize its potential for hard, durable, waterproof vessels. Once that technology (ceramic pottery) emerged, it changed the course of many human histories. At Çatalhöyük, the innovation of clay cooking vessels (Figure 2.18), brought about by actualizing the potential properties of sandy clay, resulted in major social changes.

Affordances and Constraints

Although materials are teeming with properties, not all of them prove advantageous to whatever human projects they are brought into. Going back to the fired clay (ceramic) example, its durability is usually considered a desirable result of that pyrotechnology, but ceramics are also brittle—they break easily.

An analytical concept for differentiating desirable properties from undesirable or unrealized properties comes from the work of ecological psychologist James Gibson. He devised the term affordance to refer to the recognized potential properties—for good or ill—for a particular set of actions in a certain situation or environment. Subsequent researchers have modified this term to distinguish advantageous properties—such as the durability of ceramics or the thermal properties of clay cooking balls—from those that create constraints on human action. Examples of constraints are the fragility of pottery that mandates careful handling, and the heavy weight of wet mudbricks that increases transport costs.

Affordances, as recognized beneficial properties, play a disproportionate role in the manufacture or use of objects, but they always depend on context. Affordances must also be readily apparent to the humans involved in a given context or situation. And because humans do not act in isolation but in cooperation with others, affordances have a social aspect. Not everyone will agree on whether properties are advantageous, so any material or object affordance may require social negotiation.

Backswamp Mudbricks: Affordance or Constraint?

We can only imagine the discussions and disagreements that accompanied the founding of the first houses of what would become the höyük (mound) now called Çatal. Should the pioneer settlers erect their mudbrick dwellings directly on the deposits of backswamp clay, putting them at risk of flooding? Or should they build on the higher natural rises, out of the flood zone but farther from the backswamp clays preferred for bricks?

We know the answer only in historical retrospect. Note that in this example, affordances include the location and abundance of backswamp clay. They are not limited to some intrinsic properties of clay as scientifically defined, because affordances are situational.

Thus affordances are produced or become evident out of human interactions (making) with materials in a particular context. This means that affordances are dynamic or changeable because humans, materials, and situations will vary in time and space. Archaeologist Chris Doherty argued that the diversity of clays available at Çatalhöyük seems obvious to us today, but that affordance was not realized until the inhabitants began removing the backswamp clay. In so doing, they disrupted the natural environment, changing the affordances of the original landscape. The concept of affordance is another reason to avoid treating materials as stable, universally defined categories.

Things as Assemblages

In a vein similar to Martin Heidegger’s dynamic and mutable conception of a “thing,” other 20th-century philosophers have described materials or objects as “assemblages” or “networks.” Individual materials are assemblages of their particular potential properties. For example, the backswamp clay, marls, and sandy clays at Çatalhöyük each bundle different properties.

Materials are fluid: each has its own history of potential and actualized properties, and those properties are constantly negotiated in social projects. This fluidity carries over to the objects made from them. Objects are “bundles” of different materials brought together in a certain way, their properties emerging from dynamic and situational human interactions. Although we tend to view objects as solid and stable, they are just as changeable as the materials that compose them. The mudbricks that the residents of Çatalhöyük depended on for 2,000 years exemplify this instability.

Objects as assemblages are unstable in another way. They do not naturally endure in terms of their original networks of associations or meanings, even though some of their physical components may persist. The people of Çatalhöyük regularly razed their houses, transforming them into platforms for subsequent dwellings on top. In some cases these former-houses-as-foundations became places of the dead, with new meanings and values. Thus the mound endures into the present some 9,000 years after it was started; yet, as a network of things, people, and values, it was constantly changing (see Figure 2.11).

The users of objects are generally aware of the inevitable changes objects undergo. The Çatalhöyük data reveal how hard people labored, sometimes to an extraordinary degree, to stabilize mutable objects and maintain the networks in which those objects participated. These efforts show the interdependence of people, materials, and the objects assembled from them, and how these dependencies played out over thousands of years. Appreciating this complicated history is important for more fully comprehending the impact of materials on society.

Conclusion: Entanglement and its Consequences

The entanglement of clay at Neolithic Çatalhöyük thus provides two important lessons for understanding the impact of materials on societies. The first is that entanglement of materials is a historical process, meaning it has material and social consequences that play out over time. The entanglement begins as materials are deployed to meet human needs and desires. Clay was essential for many daily life-sustaining activities. However, at Çatalhöyük there was no such thing as “clay.” The inhabitants differentially utilized the multiple clay-bearing deposits, both in the Çatalhöyük vicinity and from outside the area, according to their particular perceived properties and the contexts of their extraction, transport, processing, and use or reuse.

These clays, and the non-clay materials with which they were naturally or humanly assembled, had their own properties. Some properties were actualized, while other potential properties remained latent or virtual. Some properties were advantageous to human projects. Besides being abundant and easy to acquire, clay could be formed into many relatively durable objects with a minimum of skill or tools, and could dry in the natural heat of the sun. Other properties posed constraints, such as the weight of wet clay and the high shrinkage rate of the smectitic clay. Both actualized and virtual, advantageous and disadvantageous properties, in their proper contexts, must be thoroughly accounted for in assessing the impact of clay on the Neolithic societies that were ancestral to our own.

This case study further reveals clay objects as assemblages, “things” that participated in assembling higher order things. They gathered different materials, people, processes, and places in their making and unmaking. Things depended on other things and they depended on the people who depended on them.

The second lesson is that entanglement is a historical process whereby human actions are intertwined over time with physical forces. The latter include decay, degradation, corrosion, transformation, wearing out, and running out of materials. When things start to fall apart, the usual reaction is to fix them or find equivalent replacements because entanglement is an entrapment.

This means that all these intertwined processes play a central role in social change. New materials, or newly actualized properties of existing materials, are selected for use only if they fit within existing entanglements; otherwise they may be ignored. Cookpots at Çatalhöyük had to fit into the existing technologies of cooking with clay and making clay vessels, as well as into the gradual substitution of sandier clays for the siltier backswamp clay.

Through the long lens of archaeology, we can begin to understand the consequences of entanglement at Çatalhöyük. Exactly the same processes are occurring in our lives today, constraining our alternative futures. However, because we are now “trapped” by entanglements with many new materials, it is difficult to apprehend how much we depend on things that depend on us. Even when we recognize our interdependencies with materials, it is a challenge to overcome them when our entanglements prevent us from adopting new materials or alternative technologies for societal needs.

- Select an “earthy” material that is critical to our modern society (e.g., precious and utilitarian metals, fossil fuels, rare earths). Using the four premises of the entanglement model, explain in detail our interdependencies with that material. How can we potentially escape the entrapment of the entanglement of this material?

- Pick another “earthy” material and explain which of its properties are “affordances” and which are “constraints.” Remember that affordances and constraints are context-dependent and not always inherent in the material itself, so you must specify the context or situation. Were the constraining properties known at the time objects of that material were made or first used? What about potential affordances?

- Understanding a “thing” as an assembly or gathering is important to comprehending new approaches to how humans use materials and to Hodder’s entanglement model. Using Figure 2.15 as an example, diagram the clay cooking ball as a “thing” in the early history of Çatalhöyük. Consider how it assembled other substances, people, places, tools, and processes as it was made and as it was used.

- Clay at Çatalhöyük was used to make primarily “subceramic” (unfired) objects that were dried in the heat of the sun. The properties of ceramics are generally considered to be superior to those of unfired clay, yet unfired clay does have important advantages or affordances. What were the specific affordances of unfired clay for the people of Çatalhöyük? What purposes continue to be served by unfired clay materials in our modern society?

Key Terms

entanglement

Neolithic

Soil Revolution

clay

Age of Clay

plasticity

temper

thing

paste

tanglegram

making

potential properties

actualized

affordance

constraints

Author Biography

Susan D. Gillespie is Professor of Anthropology at the University of Florida. She received her PhD in Anthropology from the University of Illinois at Urbana-Champaign in 1983, specializing in the archaeology and ethnohistory of Mesoamerica. Dr. Gillespie has directed archaeological projects in the states of Oaxaca and Veracruz, Mexico, investigating the rise of complex society in the Formative Period (ca. 1500–500 BCE). She is the author of The Aztec Kings: The Construction of Rulership in Mexica History (Univ. of Arizona Press, 1989), awarded the 1990 Erminie Wheeler-Voegelin Prize by the American Society for Ethnohistory. She co-edited Things in Motion: Object Itineraries in Anthropological Practice, with R. A. Joyce (School of Advanced Research Press, 2015), Archaeology Is Anthropology, with D. L. Nichols (Archeological Papers of the American Anthropological Association No. 13, 2003), and Beyond Kinship: Social and Material Reproduction in House Societies, with R. A. Joyce (Univ. of Pennsylvania Press, 2000).

Nicole Boivin, “Geoarchaeology and the Goddess Laksmi: Rajasthani Insights into Geoarchaeological Methods and Prehistoric Soil Use,” in Soils, Stones and Symbols: Cultural Perceptions of the Mineral World, eds. Nicole Boivin and Mary Ann Owoc (London: Univ. College London Press, 2004), 174, 181, http://www.worldcat.org/oclc/56911728.

Chris Doherty, “Sourcing Çatalhöyük’s Clays,” in Hodder, ed., Substantive Technologies at Çatalhöyük, 51.

Boivin, “Geoarchaeology and the Goddess Laksmi,” 177.

Boivin, 178.

Serena Love, “An Archaeology of Mudbrick Houses from Çatalhöyük,” in Hodder, ed., Substantive Technologies at Çatalhöyük, 96; Burcu Tung, “Building with Mud: An Analysis of Architectural Materials at Çatalhöyük,” in Hodder, ed., Substantive Technologies at Çatalhöyük, 78.

This section draws on the analysis of Çatalhöyük’s clay sources and how they were used by its inhabitants, conducted by archaeologist Chris Doherty; see Doherty, “Sourcing Çatalhöyük’s Clays.”

The premises of Hodder’s model are best explained in Hodder, “Human-Thing Entanglement,” and Hodder, Entangled.

Martin Heidegger (1889–1976) was a leader in the 20th-century philosophy of Continental Phenomenology. His influential essay “The Thing” (Das Ding) was published in English in Heidegger, Poetry, Language, Thought, trans. A. Hofstadter (London: Harper, 1971), 165–82, http://www.worldcat.org/oclc/1070903602.

For tempering of the mudbricks, see Hodder, Entangled, 152; Mirjana Stevanović, “New Discoveries in House Construction at Çatalhöyük,” in Hodder, ed., Substantive Technologies at Çatalhöyük, 111; and Tung, “Building with Mud,” in Hodder, ed., Substantive Technologies at Çatalhöyük, 79.

Hodder, “Human-Thing Entanglement,” 157.

Doherty, “Sourcing Çatalhöyük’s Clays,” 64; Hodder, “Human-Thing Entanglement,” 160–61; Hodder, Entangled, 65–67.

Hodder, Entangled, 66.

Hodder, Entangled, 67.

Hodder, Entangled, 65.

Hodder, Entangled, 152–56.

Doherty, “Sourcing Çatalhöyük’s Clays,” 65.

Hodder, Entangled, 153–54.

Conneller, An Archaeology of Materials, 5–8.

Conneller, An Archaeology of Materials, 19.

This is the distinction 20th-century philosophers have made between potential and actual (Alfred North Whitehead, 1861–1947), or virtual and actual (Gilles Deleuze, 1925–1995), properties. See Gavin Lucas, Understanding the Archaeological Record (Cambridge: Cambridge Univ. Press, 2012), 167, http://www.worldcat.org/oclc/1162097299.

Lucas, Understanding the Archaeological Record, 167.

Tim Ingold, “Toward an Ecology of Materials,” Annual Review of Anthropology 41 (2012): 434–35.

Lucas, Understanding the Archaeological Record, 167.

James J. Gibson (1904–1979) was a leader in the psychology of visual perception. See Gibson, The Ecological Approach to Visual Perception (Boston: Houghton Mifflin, 1979), http://www.worldcat.org/oclc/1000427293. For revisions of his ideas see Carl Knappett, “The Affordances of Things: a Post-Gibsonian Perspective on the Relationality of Mind and Matter,” in Rethinking Materiality: The Engagement of Mind with the Material World, eds. Elizabeth DeMarrais, Chris Gosden, and Colin Renfrew (Cambridge: McDonald Institute for Archaeological Research, Univ. of Cambridge, 2004), 43–51, http://www.worldcat.org/oclc/60740603. See also Hodder, Entangled, 48–50.

Knappett, 46; see also Conneller, An Archaeology of Materials, 1; Ingold, “Toward an Ecology of Materials,” 435.

Doherty, “Sourcing Çatalhöyük’s Clays,” 65–66.

Lucas, Understanding the Archaeological Record, 188; Ingold, “Toward an Ecology of Materials”; Bruno Latour, Reassembling the Social: An Introduction to Actor-Network Theory (Oxford: Oxford Univ. Press, 2005), http://www.worldcat.org/oclc/1156912903.